Post Graduate Program In AI And Machine Learning, Mumbai

Ranked No.1 AI and Machine Learning Course by TechGig

Put your career in the fast lane with our extensive AI and ML Course in Mumbai, offered in collaboration with Purdue University and IBM. Simplilearn’s AI and ML certification offers a cutting-edge curriculum, more live interaction, masterclasses, hackathons, and hands-on industry projects for a rich learning experience. Become an AI expert with our AI Course and increase your marketability in this domain.

- Admission closes on 25 Apr, 2024

- Program duration: 11 months

- Learning format: Online bootcamp

World’s #1 Online Bootcamp

Why Join this Program

Purdue’s Academic Excellence

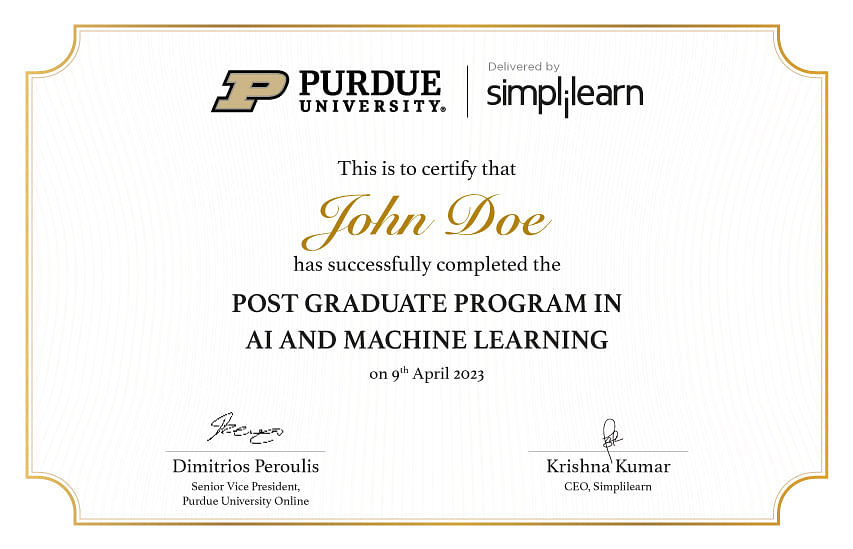

Program completion certificate from Purdue University and Simplilearn

IBM’s Industry Prowess

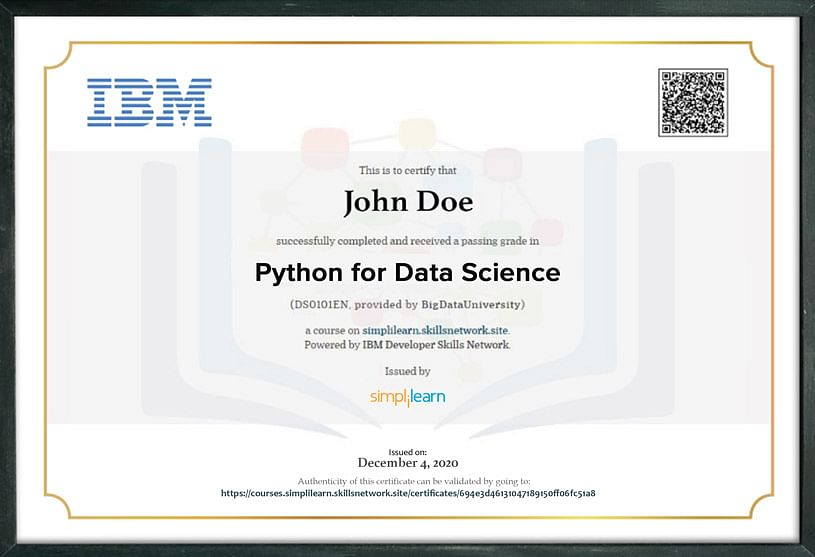

Obtain IBM certificates for IBM courses and get access to masterclasses by IBM experts

Exposure to the latest AI trends

Live online classes for generative AI, prompt engineering, explainable AI, ChatGPT, and much more

Hands-on Experience

Gain experience through 25+ hands-on projects and 20+ tools with seamless access to integrated labs

Fast-track Your Career

After completing the course, Simplilearn learners have made successful career transitions, boosted their career growth, and received salary hikes.

Learner’s achievements

- Maximum salary hike: 150%

- Average salary hike: 70%

- Hiring partners: 2900+

Our Alumni In Top Companies

Career Growth Stories

I am Sidharth Pandey, and I have been working as a UI/UX expert in this industry for 12 years. I was looking for new challenges when I came across Simplilearn’s AI and Machine Learning program. My passion for technology, and a job that demanded the use of AI and ML concepts, drew me towards the PG program in AI and Machine Learning. I have a message to share with the global community: this is the time to enhance your skills and knowledge.

- Sidharth Pandey, Software Engineer, UI/UX Expert

Machine Learning Course in Mumbai Overview

The AI and ML Course in Mumbai includes Python, reinforcement learning, statistics, NLP, machine learning, and deep learning networks. You will learn how to deploy deep learning models on the cloud via AWS SageMaker, build Alexa skills, and access GPU-enabled labs with this AI and ML Certification. You can also explore AI tools like TensorFlow, Scikit-learn, OpenAI Gym, and more.

Key Features

- Program completion certificate from Purdue University and Simplilearn

- Live-online masterclasses delivered by Purdue faculty and IBM experts

- Live interactive sessions on the latest AI trends, such as ChatGPT, generative AI, prompt engineering, and more

- Core curriculum delivered in live classes by industry experts

- Simplilearn's JobAssist helps you get noticed by top hiring companies

- Gain exposure to ChatGPT, OpenAI, DALL-E, Midjourney & other prominent tools

- 3 capstones and 25+ hands-on projects from various industry domains

- Access to Purdue’s alumni association membership on program completion

- Exclusive hackathons and Ask Me Anything sessions by IBM


AI & ML Course In Mumbai Advantage

Our curriculum equips you with the skills and knowledge to excel in your career. With a structured learning approach and industry-relevant projects, you will be able to tackle complex challenges and stay at the forefront of this field.

Partnering with Purdue University

- Receive a joint Purdue-Simplilearn certificate

- Masterclasses by Purdue faculty

- Purdue University Alumni Association membership

Program in Collaboration with IBM

- Industry-recognized IBM certificates for IBM courses

- Masterclasses from IBM experts

- Access to Ask Me Anything sessions and Hackathons

Artificial Intelligence Course Details

Through the various modules in this carefully curated AI and ML course, you will be on your way toward a successful career in AI and ML. You will also explore some of the latest advancements in the AI space, such as ChatGPT, generative AI, and explainable AI.

Learning Path

Discover the principles of automation, analysis, and much more from the AI ML Course, which is offered in collaboration with Purdue University. Begin your AI adventure by taking the AI and ML course on statistics and intelligent computing.

- AI areas: generative AI, prompt engineering, and ChatGPT.

- Practical learning: Gain hands-on experience to utilize these technologies

- Focus on prompt engineering: Understand its significance in producing specific outputs.

- Comprehensive insights: Learn how to leverage AI advancements for practical business solutions.

- Procedural & OOP understanding

- Exploring Python's advantages

- Using Jupyter Notebook

- Implementing identifiers, indentations, comments

- Python data types, operators, strings

- Mastery of Python loops

- OOP principles comprehension

- Insight into methods, attributes, and access modifiers

- IBM-designed course on Python for data science.

- Python scripting proficiency.

- Hands-on data analysis using Jupyter Lab.

- Python fundamentals: strings, lambda functions, and lists.

- NumPy for array manipulation

- Linear algebra basics

- Statistical concepts (central tendency, dispersion, skewness, covariance, correlation).

- Hypothesis testing methods: Z-test, t-test, and ANOVA; data manipulation with pandas.

- Data visualization with the Matplotlib, Seaborn, Plotly, and Bokeh libraries.

- Studying the machine learning pipeline.

- Supervised learning: regression, classification.

- Unsupervised learning (clustering) and ensemble modeling.

- Evaluation of the TensorFlow and Keras frameworks.

- Hands-on with PyTorch for recommendation engines.

- Comprehensive understanding of ML approaches & applications.

- Elevate ML skills with deep learning.

- Learn TensorFlow and Keras.

- Proficiency in deep learning concepts.

- Construct artificial neural networks.

- Navigate data abstraction layers.

- Unlock data potential for AI advancements.

- Explore deep learning vs. machine learning.

- Fundamental concepts & applications covered.

- Topics include neural networks, propagation, TensorFlow 2, and Keras.

- Performance enhancement & model interpretability techniques.

- CNNs, transfer learning, object detection.

- RNNs, autoencoders, PyTorch neural networks.

- Building and optimizing models effectively with Keras and TensorFlow.

Implement the skills you will gain throughout this program in this capstone project. You will solve industry-specific challenges by leveraging various AI and ML tools and techniques learned in the program modules. This project will help you showcase your new expertise to potential employers.

Electives:

Attend an online interactive masterclass and get insights about advancements in technology/techniques in Data Science, AI, and Machine Learning.

Attend these online live sessions delivered by industry experts to gain insights about the latest advancements in the AI space. Some of the areas and concepts covered include generative AI and its applications; leveraging the power of generative modeling to build innovative products; building and deploying GPT-powered applications; demystifying ChatGPT, its architecture, training methodology, and business applications; and applications of ChatGPT. (*Areas mentioned above are subject to change.)

- Advanced computer vision & deep learning.

- In-depth knowledge & practical skills focus.

- Topics: image formation, CNNs, object detection.

- Image segmentation, generative models.

- Optical character recognition covered.

- Distributed & parallel computing.

- Explainable AI (XAI) exploration.

- Deep learning model deployment skills.

- Advanced ML algorithms for language data.

- Focus on natural language understanding.

- Feature engineering exploration.

- Natural language generation skills.

- Automatic speech recognition covered.

- Speech-to-text and text-to-speech conversion.

- Voice assistant device development.

- Emphasis on building Alexa skills.

- Exploration of core RL concepts.

- Problem-solving skills in RL.

- RL strategies in Python and TensorFlow.

- Theoretical foundations of RL.

- Practical application of RL algorithms.

- Proficiency in diverse applications.

- Effective use of RL in various scenarios.

- Understanding the role of transformers in modern AI.

- Analysis of neural networks for generation tasks.

- Different generative model types: VAEs, GANs, transformers, autoencoders.

- Appropriate scenarios for different AI models.

- The role and efficacy of attention mechanisms.

- Analyzing popular transformer models: GPT and BERT.

- Contrasting model architectures and objectives.

Contact Us

1800-212-7688 (Toll Free)

Skills Covered

- Generative AI

- Prompt Engineering

- ChatGPT

- Explainable AI

- Conversational AI

- Large Language Models

- Supervised and Unsupervised Learning

- Model Training and Optimization

- Model Evaluation and Validation

- Ensemble Methods

- Deep Learning

- Natural Language Processing

- Computer Vision

- Reinforcement Learning

- Speech Recognition

- Machine Learning Algorithms

Industry Projects

- Project 1

Ecommerce

Develop a shopping app for an e-commerce company using Python.

- Project 2

Food Service

Use data science techniques, like time series forecasting, to help a data analytics company forecast demand for different restaurant items.

- Project 3

Retail

Use exploratory data analysis and statistical techniques to understand the factors contributing to a retail firm's customer acquisition.

- Project 4

Production

Perform a feature analysis of water bottles using EDA and statistical techniques to understand their overall quality and sustainability.

- Project 5

Real Estate

Use feature engineering to identify the top factors that influence price negotiations in the homebuying process.

- Project 6

Entertainment

Perform cluster analysis to create a recommended playlist of songs for users based on their listening behavior.

- Project 7

Human Resources

Build a machine learning model that predicts the employee attrition rate at a company by identifying patterns in employees' work habits and their desire to stay with the company.

- Project 8

Shipping

Use deep learning tools, such as CNNs, to automate a system that detects and prevents faulty situations resulting from human error.

- Project 9

BFSI

Use deep learning to construct a model that predicts potential loan defaulters and ensures secure and trustworthy lending opportunities for a financial institution.

- Project 10

Healthcare

Use distributed training to construct a CNN model capable of detecting diabetic retinopathy and deploy it using TensorFlow Serving for an accurate diagnosis.

- Project 11

Healthcare

Leverage deep learning algorithms to develop a facial recognition feature that helps diagnose genetic disorders and their variations in patients.

- Project 12

Automobile

Examine accident data involving Tesla’s Autopilot feature to assess the correlation between road safety and the use of Autopilot technology.

- Project 13

Tourism

Use AI to categorize images of historical structures and conduct EDA to build a recommendation engine that improves marketing initiatives for historic locations.

Disclaimer - The projects have been built using real, publicly available datasets of the mentioned organizations.

Program Advisors and Trainers

Program Advisors

Program Trainers

Simon Travasoli

25+ years of experience, Senior Data Science Consultant at Citi Bank

Rocky Jagtiani

24+ years of experience, AI and ML Trainer

Nikhil Garg

16+ years of experience, Data Science Instructor

Nitin Gujral

15+ years of experience, Founder at Cramlays

Career Support

Simplilearn JobAssist Program

Simplilearn’s JobAssist program is an India-specific offering in partnership with IIMJobs. The program offers extended support to certified learners to help them land their dream jobs.

IIMJobs Pro-Membership of 6 months for free

Resume building assistance to create a powerful resume

Spotlight on IIMJobs for highlighting your profile to recruiters

Join the AI and ML industry

AI, the revolutionary digital frontier, has far-reaching impacts on business and society. With the projected exponential growth of the AI market, this course is ideal for individuals striving to stay ahead of this transformative trend!

Expected global AI market value by 2027

Projected CAGR of the global AI market from 2023-2030

Expected total contribution of AI to the global economy by 2030

Companies hiring AI engineers

Batch Profile

These AI and ML courses cater to working professionals across industries. Learner diversity adds richness to class discussions and interactions.

- The class consists of learners from excellent organizations and diverse industries. Industry breakup: Information Technology - 43%, Software Product - 13%, Manufacturing - 20%, Pharma & Healthcare - 7%, BFSI - 7%, Others - 10%

- The class maintains an impressive diversity across work experience and roles. Designation breakup: Associate - 12%, Midlevel - 35%, Senior-level - 43%, Executive - 10%. Total years of experience: Less than 3 years - 13%, 3-5 years - 17%, 5-8 years - 12%, 8+ years - 58%

- The class has learners with varied educational qualifications from excellent institutions. Qualification: Bachelors - 65%, Masters - 30%, Others - 5%

Alumni Reviews

As a software engineer, I was working on application development using Oracle forms, SQL, and database languages. I wanted to explore better technologies in the market and got to know that Machine Learning and Big Data revolved around SQL. When I started looking for training institutes, Simplilearn was referred to me. What I liked most about Simplilearn’s AI and ML program was the context of the training and student-to-trainer engagement.

Aditya Shivam

Manager

What other learners are saying

Admission Details

Application Process

Candidates can apply to this AI and ML Course in 3 steps. Selected candidates can join the program by paying the admission fee.

Submit Application

Tell us why you want to take this AI and ML Course

Application Review

An admission panel will shortlist candidates based on their application

Admission

Selected candidates start the AI and ML Course in 1-2 weeks

Eligibility Criteria

Admission to this Post Graduate Program in AI ML Course requires:

Admission Fee & Financing

The admission fee for this AI and ML Course is ₹ 1,89,999 (Incl. taxes). It covers applicable program charges and Purdue Alumni Association membership.

Financing Options

We are dedicated to making our programs accessible. We are committed to helping you find a way to budget for this program and offer a variety of financing options to make it more economical.

Easy Financing Options

We have partnered with the following financing companies to provide competitive financing options with interest rates as low as 0% and no hidden costs.

Other Payment Options

We provide the following options for one-time payment:

- Internet Banking

- Credit/Debit Card

₹ 1,89,999

(Incl. taxes)


Program Benefits

- Program certificate from Purdue University and Simplilearn

- Exposure to generative AI, explainable AI, ChatGPT and more

- Understand the applications of prompt engineering

- Purdue Alumni Association Membership eligibility

- Rigorous curriculum delivered by industry experts

Program Cohorts

Next Cohort

| | Date | Time | Batch Type |
|---|---|---|---|
| Program Induction | 25 Apr, 2024 | 18:30 IST | |
| Regular Classes | 25 May, 2024 - 25 Nov, 2024 | 18:30 - 22:30 IST | Weekend (Sat - Sun) |

Other Cohorts

| | Date | Time | Batch Type |
|---|---|---|---|
| Program Induction | 14 May, 2024 | 19:00 IST | |
| Regular Classes | 22 Jun, 2024 - 15 Dec, 2024 | 19:00 - 23:00 IST | Weekend (Sat - Sun) |

AI ML Certification FAQs

Simplilearn’s Post Graduate Program in AI ML Course has been ranked the best AI and ML course in India by TechGig. It was named the #1 AI ML Certification Course based on the following criteria: depth of curriculum, interactive hands-on learning, program recognition, and student experience.

AI commonly refers to machines that simulate intellectual capabilities people associate with the human brain, such as learning, decision-making, and problem-solving. Healthcare, banking, entertainment, commerce, online shopping, and other industries have all embraced AI, making it a lucrative field. A well-recognized credential (particularly from a reputable university) can boost your career and help you seize the best opportunities in the field.

AI is the study and development of computer systems that can perform tasks previously limited to human intelligence, such as decision-making and voice recognition. Machine learning is a related field that studies how to make computers learn and adapt without being explicitly programmed. These closely related disciplines are at the cutting edge of technology and in great demand, which is why an AI ML certification offers students such huge potential.

Machine learning techniques, NLP, and deep learning algorithms are key technologies you must master to develop AI skills. Simplilearn’s AI and ML course in Mumbai covers all of these and more and will help you become an AI and Machine Learning expert in 11 months!

Yes, you need a bachelor’s degree with an average of 50% or higher to enroll in this AI and ML course in Mumbai.

You will get a Purdue Alumni Association Membership.

You can join the AI and ML course in Mumbai even if you do not come from a technical background. However, basic knowledge of programming languages and mathematics will be beneficial.

Can I enroll in an AI and ML course in Mumbai if I don't have any prior knowledge of AI or machine learning?

Yes, you can enroll in the AI and ML course in Mumbai even if you don’t have any prior knowledge of AI and machine learning, since this course takes you from the fundamentals all the way to advanced AI and machine learning skills.

Simplilearn’s AI and ML course in Mumbai will help you learn a dozen top skills and tools, including Python, Keras, TensorFlow, Django, and much more. You also get access to capstone projects in 3 domains and over 25 hands-on industry projects to practice the skills you learn during the program.

Simplilearn and Purdue University have collaborated to bring you an extraordinary learning experience. A high-engagement classroom learning environment meets the ease and convenience of a digital platform. In this AI and ML course in Mumbai, you will learn 30+ tools and skills, attend masterclasses by Purdue faculty, and complete capstone and other industry projects. All of this happens in a cohort format, where you learn faster and better alongside equally driven professionals.

Anyone with a keen interest in and passion for new-age tech skills can enroll in this AI and ML course in Mumbai. However, you are expected to have a bachelor's degree with a minimum of 50% marks. A basic understanding of programming languages and mathematics is also beneficial.

Yes, even after completing the AI and ML Certification, you may access the course content.

We have a team of dedicated admissions counselors who can guide you as you apply for this AI and ML course in Mumbai.

What are the eligibility criteria for the Professional Certificate Program in AI and Machine Learning in Mumbai?

For admission to the Professional Certificate Program in AI and Machine Learning in Mumbai, you should have:

- A bachelor's degree with an average of 50% or higher marks

- Basic understanding of programming concepts and mathematics

- 2+ years of experience (preferred)

What is the admission process for this Professional Certificate Program in AI and Machine Learning in Mumbai?

The admission process consists of three simple steps:

- All interested candidates are required to apply through the online application form

- An admission panel will shortlist the candidates based on their application

- Selected candidates will receive an offer of admission, which they accept by paying the program fee

To ensure money is not a barrier to learning, we offer various financing options to make this Professional Certificate Program in AI and Machine Learning in Mumbai more affordable. Please refer to the “Admission Fee & Financing” section for more details.

What should I expect from the Purdue Professional Certificate Program in AI and Machine Learning in Mumbai?

As a part of this Professional Certificate Program, in collaboration with IBM, you will receive the following:

- Purdue Professional Certificate Program certification

- Industry recognized certificates from IBM and Simplilearn

- Purdue University Alumni status

- Lifetime access to all core e-learning content created by Simplilearn

- $1,200 worth of IBM Cloud credits for your personal use

- Access to IBM Cloud platforms featuring IBM Watson and other cloud services

Upon successful completion of the program in Mumbai, you will be awarded a Professional Certificate Program in AI and ML certification by Purdue University. You will also get industry-recognized certificates from IBM and Simplilearn for the courses on the learning path after successful course completion.

After you pay the first installment of the program fee, you'll get access to the AI ML course sessions, including 8 to 10 hours of self-paced video content. You must complete this prescribed self-paced content before joining the first class.

Our teaching assistants are a dedicated team of experts who help you pass the AI ML certification exams on your first try. From class enrollment through project mentorship and job assistance, they actively engage with you to ensure that you follow the course plan and to help you expand your experiential learning.

Our AI and Machine Learning instructors are all industry specialists with years of experience in the field. Before they qualify to train for us, they undergo a thorough selection process that includes a profile assessment, a technical examination, and a training presentation. We also ensure that only instructors with high learner ratings remain on our AI and ML Course faculty.

Simplilearn’s JobAssist program is an India-specific offering in partnership with IIMJobs.com to help you land your dream job. Through the JobAssist program, we offer extended support to certified learners who are looking for a job switch or starting their first job. Upon successful completion of the Professional Certificate Program in AI and Machine Learning in Mumbai, you will be eligible to apply for this program, and your details will be shared with IIMJobs. As a part of this program, IIMJobs will offer the following exclusive services:

- IIMJobs Pro-Membership for 6 Months

- Resume Building Assistance

- Career Mentoring

- Interview Preparation

- Career Fairs

To participate in the JobAssist program, you need to:

- Be a graduate (engineering or equivalent)

- Complete our Professional Certificate Program in AI and Machine Learning in Mumbai successfully and earn the certificate upon completion

Simplilearn's AI ML course fee is cost-effective compared to full-time on-campus university post-graduate degree programs. We also offer easy financing options so that you don't have to pay the entire fee at once. Check out our AI and ML course fees in Mumbai below:

| Course | Mode | Price |
|---|---|---|
| AI and ML Course Fees | Online | Rs. 2,85,000 |

No, the JobAssist program is a supplementary offering that comes with the Professional Certificate Program in AI and Machine Learning. It can improve your chances of getting hired by top companies.

Simplilearn offers extended support through the JobAssist program for those who are looking for their first job or want to switch careers. After completing the course, you will be eligible for a 6-month IIMJobs Pro-Membership. IIMJobs provides exclusive services such as resume-building assistance, career mentoring, interview preparation, and career fairs.

No, the JobAssist program is designed to help you find your dream job. It will maximize your potential and chances of landing a successful job, but individual results may vary. The final selection is always dependent on the recruiter.

If you cannot access the AI and ML Certification course, reach us via the form on the right side of any page on the Simplilearn website, use the Live Chat option, or email support.

We provide email, chat, and phone support around the clock. We also have a trained team that offers on-demand support through our discussion board. Even after completing the AI ML courses online, you will have unlimited access to the discussion board and forum threads.

The AI job market is booming, with worldwide revenues expected to reach $191 billion by 2024. According to a World Economic Forum estimate, AI might create 58 million jobs across several areas of the economy over the next few years. AI is already used in various industries, so it makes sense to acquire new skills and pursue an AI career.

Participants must complete the AI ML coursework and the associated examinations and projects. Professionals will evaluate your end-of-class exams, projects, and capstone projects. To earn the AI and ML Certification, you must score over 75% on the examinations, complete the projects satisfactorily, and respond to the trainer's feedback.

In the domain of AI and machine learning, some of the most prominent career roles include:

- Machine Learning Engineer

- Deep Learning Engineer

- NLP Engineer

- Artificial Intelligence Engineer

- AI Architect

- Technical Product Manager

- Data Scientist


This extensive AI and ML Course was developed in collaboration with Purdue University and IBM. A Purdue University faculty member serves as your program advisor throughout the program. Purdue academics and IBM professionals deliver masterclasses to help you gain a deeper understanding of AI/ML concepts.

Professionals and students interested in becoming AI engineers should first study the fundamentals of AI, statistical analysis, and data modeling, as well as at least one scripting language such as Python or R. After that, they should move on to more sophisticated topics such as data processing, data manipulation, Hadoop, and computer vision. Deep learning and business analytics tools such as Tableau or QlikView can help you advance your AI career.

AI experts with AI and ML Course training are best suited for industries like information technology, FinTech, healthcare, BFSI, and eCommerce.

Professionals with AI and Machine Learning skills are in great demand across various industries, at companies including Google, Accenture, IBM, Amazon, and Microsoft.

This Post Graduate Program in AI and Machine Learning is non-credit and does not lead to a degree.

Post Graduate Programs are certification programs and do not include transcripts for WES; these are reserved for degree programs. We do not offer sealed transcripts, so our programs are not applicable for WES or similar services.

AI and Machine Learning courses teach the fundamentals and applications of artificial intelligence (AI) and machine learning (ML), as well as elements of data science. These courses typically cover topics such as data analysis, algorithms, programming, AI and ML application development, and more. They are designed to give students the knowledge and skills needed to analyze data, design algorithms, develop AI and ML applications, and understand the implications of AI and ML for society.

The best AI and ML course is the Purdue AI ML Course. This comprehensive course covers all aspects of AI and ML, from foundational concepts to advanced applications, and provides hands-on projects and real-world case studies for students to gain practical experience. Additionally, Simplilearn offers support from instructors, mentors, and online forums to help students stay on track and answer any questions they have.

Learning ML and AI can be a daunting process, but there are a number of resources available to help you get started. One of the best ways to learn ML and AI is to take online courses from reputable institutions such as Simplilearn. Additionally, there are a number of books and tutorials that can provide an introduction to the concepts and techniques of ML and AI. It is also important to practice coding and to apply what you learn to real-world problems. Finally, networking with other AI and ML professionals can be a great source of knowledge and advice.

Simplilearn's AI ML certification is one of the best available because it provides an in-depth curriculum covering the essential concepts and tools needed to become a successful AI and ML professional. The certification includes courses on topics such as Machine Learning, Deep Learning, Natural Language Processing, and Computer Vision. It also includes hands-on projects that allow you to apply your knowledge to real-world problems. Additionally, the program is supported by experienced instructors who are experts in the field, providing guidance and assistance along the way.

AI certificates can be worth it, depending on the course and the institution offering them. It is important to make sure that the course is from a reputable institution with international recognition and that it is well supported with resources and guidance. An AI certificate can help you build a strong foundation in AI concepts and techniques and demonstrate your expertise in the field to potential employers.

To become an AI and Machine Learning professional, you need to have a solid understanding of the fundamentals of AI and machine learning, including data analysis, data science, programming, algorithms, and AI and ML application development. You also need to have experience in working with AI and ML technologies, such as neural networks, natural language processing, and computer vision. Additionally, you may need to have knowledge of specific frameworks, such as TensorFlow or PyTorch, and a good understanding of mathematics and statistics. You can take AI and ML courses, attend boot camps, or pursue certifications in AI and ML to gain these skills.

The masterclasses will provide you with exposure to some of the latest trends in the AI space. Some of the areas covered include:

- Generative AI and its Applications

- Leveraging the power of generative modeling to build innovative products

- OpenAI and its Role in NLP and AI

- Building and deploying GPT-powered applications

- Demystifying ChatGPT, its architecture, training methodology, and business applications

- ChatGPT best practices, limitations, and avenues for future development

- Building real-world applications with the OpenAI API and ChatGPT

- Applications of ChatGPT, OpenAI, DALL-E, Midjourney & other tools

- Explainable AI

- Chatbots and their uses in companies such as Microsoft, Google, and Meta

- Latest trends in the AI space

*Areas mentioned above are subject to change

NLP courses in the program contribute significantly to a student's knowledge in AI and Machine Learning. NLP is a vital subfield of AI and Machine Learning that focuses on understanding and processing human language. By taking NLP courses, students develop expertise in building NLP models, extracting meaning from text data, and creating language-centric AI applications.

Find AI & Machine Learning Programs in Mumbai

Professional Certificate Course in AI and Machine Learning- Disclaimer

- PMP, PMI, PMBOK, CAPM, PgMP, PfMP, ACP, PBA, RMP, SP, and OPM3 are registered marks of the Project Management Institute, Inc.